Graphics Processing Units (GPUs) have revolutionized computing, especially in areas that demand high computational power such as deep learning, data analytics, and graphics rendering. With the rise of cloud platforms like Google Colab, users now have access to powerful GPUs and TPUs (Tensor Processing Units) for their computational tasks. In this article, we compare the A100, V100, and T4 GPUs and the TPU available in Google Colab.

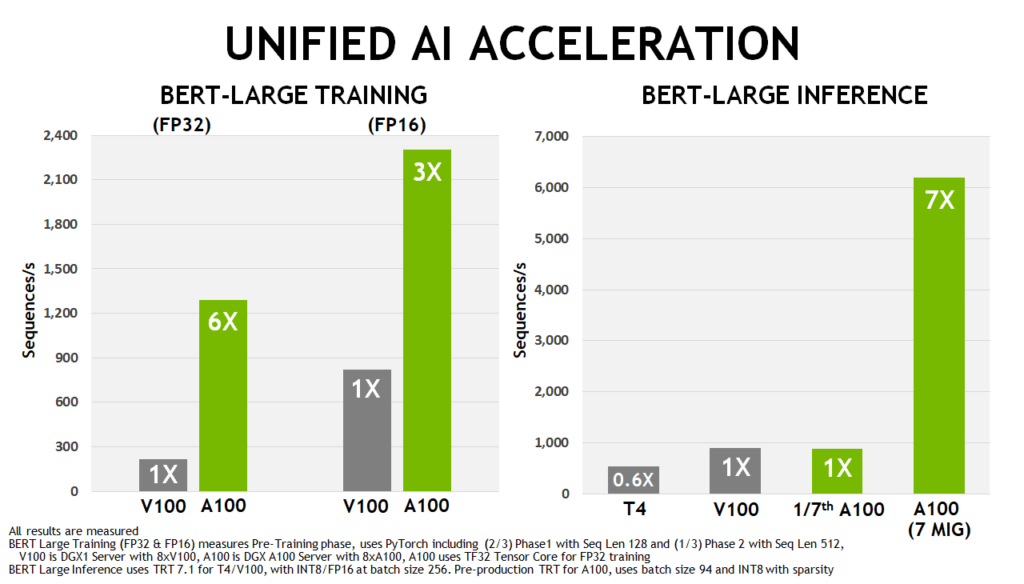

NVIDIA A100 GPU: The NVIDIA A100, based on the Ampere architecture, is a powerhouse among GPUs. Designed primarily for data centers, it is marketed by NVIDIA as delivering up to 20 times the performance of the previous generation on certain AI workloads. It is available in 40 GB and 80 GB models; at launch, NVIDIA advertised the 80 GB variant as having the world’s fastest memory bandwidth, at over 2 TB/s. This GPU is well suited to high-performance computing tasks, especially in data science.
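To put the 2 TB/s figure in perspective, here is a back-of-the-envelope sketch of the minimum time needed to read the A100 80 GB’s entire memory once. It assumes decimal units (1 GB = 10^9 bytes, 1 TB/s = 10^12 bytes/s), that the card sustains its peak bandwidth, and it ignores caches; the helper name `min_read_time_s` is ours, for illustration only.

```python
def min_read_time_s(num_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound on the time to stream `num_bytes` through memory once.

    Assumes the GPU sustains its peak bandwidth, which real kernels rarely
    do, so measured times will be longer.
    """
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return num_bytes / bandwidth_bytes_per_s


GB = 10**9          # decimal gigabyte (assumption: vendor figures use SI units)
TB_PER_S = 10**12   # decimal terabytes per second

# A100 80 GB at the advertised ~2 TB/s: read all 80 GB exactly once.
t = min_read_time_s(80 * GB, 2 * TB_PER_S)
print(f"{t * 1000:.0f} ms")  # 40 ms
```

In other words, a memory-bound operation that touches every byte on the 80 GB card cannot finish in less than roughly 40 ms, which is why memory bandwidth matters as much as raw compute for large models.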

NVIDIA V100 GPU: The V100, based on NVIDIA’s earlier Volta architecture, is another data-center GPU tailored for data science and AI applications. It comes in 16 GB and 32 GB versions, and the 32 GB model leaves more room for large models and batches. NVIDIA markets the V100 as offering the performance of up to 100 CPUs in a single GPU. Note, however, that it is a data-center card and not a good choice for gaming.
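Memory capacity often decides which card a model fits on. The sketch below estimates how many parameters’ weights fit in a given amount of GPU memory at a given numeric precision. It counts weights only (training also needs gradients, optimizer state, and activations, which usually take several times more memory), and it treats “32 GB” as 32 × 2^30 bytes, which is an assumption since vendors are not always consistent. The names `max_params` and `BYTES_PER_PARAM` are hypothetical, chosen for illustration.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def max_params(memory_gib: float, precision: str = "fp32") -> int:
    """Upper bound on how many parameters' weights fit in `memory_gib` GiB.

    Counts weights only; gradients, optimizer state, and activations are
    ignored, so the usable number is much smaller during training.
    Assumption: 1 GB here means 2**30 bytes.
    """
    total_bytes = memory_gib * 2**30
    return int(total_bytes // BYTES_PER_PARAM[precision])


for precision in ("fp32", "fp16"):
    billions = max_params(32, precision) / 1e9
    print(f"32 GB V100, {precision}: ~{billions:.1f} billion parameters (weights only)")
```

Halving the precision doubles the number of weights that fit, which is one reason reduced-precision formats are popular on memory-limited GPUs.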

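NVIDIA T4 GPU: The T4, based on NVIDIA’s Turing architecture, is NVIDIA’s answer to the needs of deep learning (especially inference), machine learning, and data analytics. It is designed to be energy-efficient, with a power draw of just 70 W, while still delivering solid computational performance. Newer data-center GPUs such as the NVIDIA A30 are reported to be up to ten times faster on some workloads, but the T4 remains a reliable choice for lighter training jobs and inference.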

Google TPU: Google’s Tensor Processing Unit (TPU) is a custom-developed chip designed to accelerate machine learning tasks. Available in Google Colab, the TPU offers high-speed matrix computations, essential for deep learning models. While it’s not a GPU, its specialized architecture makes it a formidable competitor, especially for tensor-based computations.
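Why does matrix throughput matter so much? A dense (fully connected) layer applied to a batch of inputs is a single matrix multiplication, and multiplying an (m × k) matrix by a (k × n) matrix takes about 2·m·k·n floating-point operations: one multiply and one add per term. The sketch below checks that count against a plain triple-loop multiplication and then applies it to an illustrative layer size; `matmul_flops` and `naive_matmul` are our own names, not part of any library.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: one multiply + one add per term."""
    return 2 * m * k * n


def naive_matmul(a, b):
    """Plain triple-loop matmul that also counts the operations it performs."""
    m, k, n = len(a), len(b), len(b[0])
    out = [[0.0] * n for _ in range(m)]
    ops = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # 1 multiply + 1 add
                ops += 2
            out[i][j] = acc
    return out, ops


a = [[1.0, 2.0], [3.0, 4.0]]             # 2 x 2
b = [[5.0, 6.0, 7.0], [8.0, 9.0, 10.0]]  # 2 x 3
c, ops = naive_matmul(a, b)
print(c)                          # [[21.0, 24.0, 27.0], [47.0, 54.0, 61.0]]
print(ops, matmul_flops(2, 2, 3))  # 24 24

# Illustrative dense layer: batch of 128, 1024 -> 1024 weight matrix.
print(f"{matmul_flops(128, 1024, 1024):,} FLOPs")  # 268,435,456 FLOPs
```

TPUs (and the Tensor Cores in the NVIDIA GPUs above) are built to execute this multiply-accumulate pattern in hardware on large blocks of data at once, rather than one term at a time as in the loop.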

Which One to Choose?

- For Data Science and AI: Both the NVIDIA A100 and V100 are top contenders. The A100, with its newer architecture and higher speed, is likely to come out ahead for most tasks. However, the V100 remains a solid choice.

- For Deep Learning and Machine Learning: The T4 is a reliable choice, but if tensor computations dominate your workload, the TPU might be more efficient.

- For Cost-Effectiveness: Google Colab’s free tier offers access to some GPUs (typically the T4) and to TPUs, while premium GPUs such as the A100 generally require a paid plan. For prolonged and intensive tasks, it’s essential to consider Colab’s runtime limits and the potential costs of using these resources on cloud platforms.

In conclusion, the choice between the A100, V100, T4, and TPU depends on the specific requirements of the task at hand. Google Colab provides a fantastic platform to experiment and determine which one best suits your needs. As computational needs grow, it’s reassuring to know that such powerful tools are within easy reach.
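A practical first step when experimenting in Colab is to confirm which accelerator your runtime actually received. The sketch below does this from Python for GPU runtimes, assuming NVIDIA’s `nvidia-smi` tool is on the PATH (as it normally is on Colab GPU runtimes); on CPU or TPU runtimes, where `nvidia-smi` is typically absent, it returns an empty list. The helper names `list_gpus` and `parse_gpu_query` are ours, and the parser assumes the comma-separated output produced by `--format=csv,noheader`.

```python
import shutil
import subprocess


def parse_gpu_query(csv_text: str) -> list[tuple[str, str]]:
    """Parse 'name, memory.total' lines from nvidia-smi CSV output (no header)."""
    gpus = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue  # skip blank lines
        # Split on the last comma so the GPU name is kept whole.
        name, memory = (field.strip() for field in line.rsplit(",", 1))
        gpus.append((name, memory))
    return gpus


def list_gpus() -> list[tuple[str, str]]:
    """Return (name, total memory) for each visible NVIDIA GPU, or [] if none."""
    if shutil.which("nvidia-smi") is None:
        return []  # CPU or TPU runtime: NVIDIA driver tools not installed
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return []  # driver present but query failed
    return parse_gpu_query(result.stdout)


gpus = list_gpus()
print(gpus or "No NVIDIA GPU detected (CPU or TPU runtime?)")
```

On a T4 runtime, for example, the raw output is a single line along the lines of `Tesla T4, 15360 MiB`, though the exact name and memory string depend on the driver version.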

About Us: At Prismanalytics, we understand the importance of high-performance computing. We offer solutions tailored to your needs, ensuring that you have access to the best resources for your tasks. Whether you’re a startup or an established enterprise, our platform is designed to provide efficiency, reliability, and speed at competitive prices. Join our growing list of satisfied customers and experience the difference.